AI-Driven Captioning Workflows: From ChatBot to Agentic Automation

Integrating OpenAI Agents and MCP Servers into Media Production Pipelines

By: Tim Taylor

Last Updated: December 11, 2025

As media supply chains become increasingly automated, the ability to programmatically process closed captions and subtitles has evolved from a convenience to a critical infrastructure requirement. At Closed Caption Creator Inc., we've taken our first steps toward integrating AI reasoning capabilities into production workflows—moving beyond traditional rule-based automation to context-aware, conversational interfaces that can understand intent and generate precise configuration parameters.

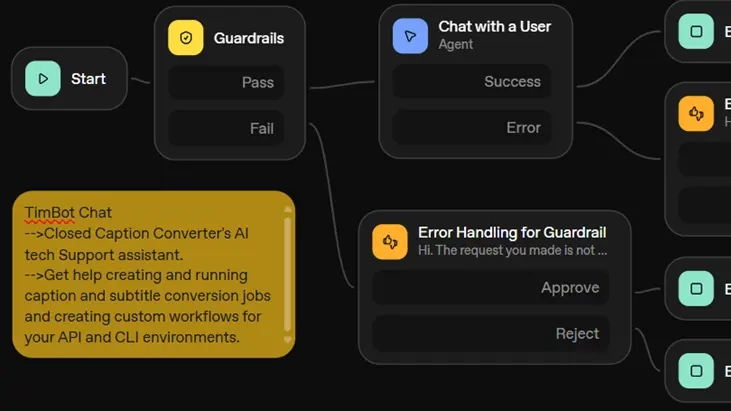

This article examines our implementation of TimBot, an AI assistant built on OpenAI's Agent architecture, and outlines our roadmap toward developing a Model Context Protocol (MCP) server that will enable third-party AI agents to leverage our caption conversion capabilities directly.

The Challenge: Bridging Domain Expertise and API Complexity

Closed Caption Converter supports 25+ subtitle formats, including broadcast standards like SCC, MCC, and EBU-STL as well as streaming formats like WebVTT and TTML. Our API exposes hundreds of configuration parameters spanning frame rate conversion, timecode conformance, positional mapping, character encoding, and format-specific compliance requirements.

For experienced broadcast engineers, this granular control is essential. However, the learning curve presents challenges:

- New users struggle to translate high-level requirements ("convert SCC to WebVTT for web delivery") into specific API parameters

- Developers integrating caption processing into larger workflows need to understand nuanced broadcast standards

- Format-specific edge cases and compliance requirements aren't always intuitive from documentation alone

We recognized an opportunity to use conversational AI to serve as an intelligent interface layer—translating natural language requirements into validated JSON presets while surfacing domain expertise accumulated over 45 years in the industry.

Architecture: Building TimBot with OpenAI Agents

TimBot was developed using OpenAI's Agent framework, which provides structured reasoning capabilities through function calling and retrieval-augmented generation (RAG). The system architecture consists of several key components:

Guardrails and Input Validation

All user inputs pass through a security and scope validation layer that filters for malicious patterns and redirects off-topic queries. TimBot is intentionally scoped to caption conversion workflows: requests outside this domain are politely declined with guidance toward appropriate resources. This keeps conversations from drifting off-topic and helps responses remain grounded in verified technical knowledge.
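A minimal sketch of what such a validation layer might look like. The keyword list, the blocked patterns, and the `check_input` function are illustrative assumptions, not TimBot's actual guardrail implementation; a production system would more likely rely on a classifier or moderation model.

```python
import re

# Hypothetical scope terms and block patterns, for illustration only.
IN_SCOPE_TERMS = {
    "caption", "captions", "subtitle", "subtitles", "scc", "mcc",
    "webvtt", "vtt", "ttml", "stl", "timecode", "608", "708",
}
BLOCKED_PATTERNS = [
    re.compile(r"ignore (all|any|previous) instructions", re.IGNORECASE),
    re.compile(r"<script\b", re.IGNORECASE),
]


def check_input(query: str) -> str:
    """Classify a user query as 'blocked', 'off_topic', or 'ok'."""
    # Security check first: reject anything matching a known-bad pattern.
    if any(pattern.search(query) for pattern in BLOCKED_PATTERNS):
        return "blocked"
    # Scope check: require at least one caption-related term.
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    if not words & IN_SCOPE_TERMS:
        return "off_topic"
    return "ok"
```

Running the security check before the scope check means a malicious query is rejected even if it happens to mention captions.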

Agent Core with Structured Reasoning

The Agent component orchestrates interactions between the user query, knowledge base, and response generation. Key implementation details:

- Instruction Set: The agent is explicitly instructed to search proprietary knowledge bases rather than generating speculative answers from general web knowledge. This reduces hallucination risk and improves technical accuracy.

- Reasoning Effort: We balance response latency against reasoning depth. For straightforward format conversions, lower reasoning effort provides sub-second responses. Complex multi-step workflows requiring conditional logic trigger deeper reasoning passes.

- Follow-Up Protocol: Rather than making assumptions, the agent asks clarifying questions about frame rate standards (drop-frame vs. non-drop), timecode offsets, positioning requirements, and delivery specifications.
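The reasoning-effort and follow-up behaviors above can be sketched as two small decision functions. Everything here is an assumption made for illustration: the `ConversionRequest` shape, the `"low"`/`"high"` labels, and the question wording are hypothetical and not taken from TimBot's configuration.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Frame rates for which drop-frame timecode is a meaningful question.
DROP_FRAME_RATES = (29.97, 59.94)


@dataclass
class ConversionRequest:
    source_format: str
    target_format: str
    frame_rate: Optional[float] = None
    drop_frame: Optional[bool] = None
    extra_steps: List[str] = field(default_factory=list)  # e.g. offsets, repositioning


def pick_reasoning_effort(req: ConversionRequest) -> str:
    """Use deeper reasoning only when the request has additional workflow steps."""
    return "high" if req.extra_steps else "low"


def follow_up_questions(req: ConversionRequest) -> List[str]:
    """Ask about missing details instead of assuming them."""
    questions = []
    if req.frame_rate is None:
        questions.append("What is the video frame rate?")
    elif round(req.frame_rate, 2) in DROP_FRAME_RATES and req.drop_frame is None:
        questions.append("Is the timecode drop-frame or non-drop?")
    return questions
```

For a plain 29.97 fps SCC-to-WebVTT request with no drop-frame flag, this sketch picks low effort and returns a single drop-frame question.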

Vectorized Knowledge Store

TimBot's knowledge base contains:

- Technical specifications for each supported format

- Empirical use cases from production workflows (e.g., "How do I convert 608 captions to 708 while maintaining positioning?")

- Known edge cases and their solutions

- API parameter schemas for Web GUI, CLI, and REST API implementations

- Regulatory compliance requirements (e.g., FCC mandates, EBU technical standards)

This knowledge base is continuously expanded as new formats are supported and edge cases are identified in production environments. Notably, we restrict the agent from general web searches for dynamic content—the only whitelisted external references are authoritative regulatory bodies whose specifications change over time.
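To illustrate how retrieval from a vectorized store works in principle, here is a toy sketch that uses bag-of-words vectors and cosine similarity. It is a stand-in for a real embedding model and vector database; the document IDs, document text, and `min_score` threshold are invented for the example.

```python
import math
from collections import Counter
from typing import Dict, Optional


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term count (not a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical knowledge base entries.
DOCS: Dict[str, str] = {
    "scc-spec": "scc scenarist closed caption format 608 captions 29.97 frame rate",
    "webvtt-spec": "webvtt web video text tracks cue positioning alignment",
    "608-to-708": "convert 608 captions to 708 while maintaining positioning",
}


def retrieve(query: str, docs: Dict[str, str], min_score: float = 0.2) -> Optional[str]:
    """Return the ID of the best-matching document, or None if nothing is close enough."""
    q = embed(query)
    best_score, best_id = max((cosine(q, embed(text)), doc_id) for doc_id, text in docs.items())
    return best_id if best_score >= min_score else None
```

A query such as `retrieve("convert 608 to 708 positioning", DOCS)` selects the `608-to-708` entry, while an unrelated query falls below the threshold and returns `None`.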

Error Handling and Epistemic Humility

A critical design principle: TimBot refuses to speculate. If a query cannot be answered with high confidence from the knowledge base, the system explicitly states this and directs users to technical support rather than providing a "best guess." This approach prioritizes accuracy over apparent helpfulness, which is essential in production environments where incorrect configuration parameters can result in compliance failures or rejected deliverables.
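The refuse-to-speculate rule can be expressed as a simple gate on retrieval confidence. This is a sketch under assumptions: the `(score, passage)` input shape, the `0.75` threshold, and the support message wording are all hypothetical.

```python
from typing import List, Tuple

SUPPORT_MESSAGE = (
    "I can't answer that confidently from the knowledge base. "
    "Please contact technical support."
)


def answer_or_escalate(hits: List[Tuple[float, str]], threshold: float = 0.75) -> str:
    """Return the best passage only if its score clears the threshold.

    `hits` is a list of (similarity score, passage) pairs from retrieval.
    Below the threshold, the function declines rather than guessing.
    """
    confident = [hit for hit in hits if hit[0] >= threshold]
    if not confident:
        return SUPPORT_MESSAGE
    return max(confident, key=lambda hit: hit[0])[1]
```

The key design choice is that an empty or low-scoring result set produces an explicit escalation message, never a fallback answer.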

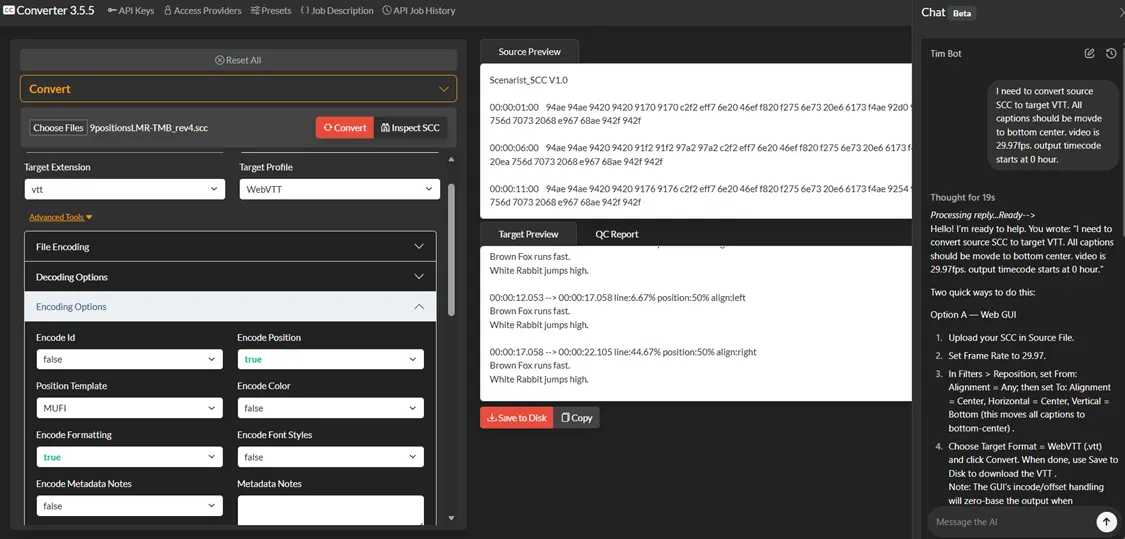

Example Workflow: Natural Language to API Configuration

Consider a typical user query:

"I need to convert source SCC to target VTT. All captions should be moved to bottom center. Video is 29.97fps. Output timecode starts at 0 hour."

TimBot parses this requirement and generates a complete JSON preset for Web GUI, CLI, or API use:

    {
      "source_frameRate": 29.97,
      "target_frameRate": 29.97,
      "incode": "auto",
      "automatic_offset": true,
      "position": [
        {
          "from": {"alignment": "any"},
          "to": {"alignment": "center", "xPos": "center", "yPos": "end"}
        }
      ],
      "source_profile": "scenerist",
      "target_profile": "webVtt"
    }

The agent then asks a follow-up question:

"Is your 29.97 video drop-frame? If yes, I'll confirm the DF setting before you run."

This contextual awareness, understanding that 29.97fps often implies drop-frame timecode in NTSC broadcast contexts, demonstrates how domain-specific knowledge enables more intelligent interactions than a generic LLM could provide.
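As a rough illustration of the step from parsed requirements to a preset, the sketch below assembles the same keys that appear in the example preset above and checks that none are missing. The `build_preset` and `validate_preset` helpers and the `REQUIRED_KEYS` set are hypothetical; the real parameter schema may require different or additional fields.

```python
import json
from typing import Dict, List

# Assumed minimum set of keys, taken from the example preset in this article.
REQUIRED_KEYS = {"source_frameRate", "target_frameRate", "source_profile", "target_profile"}


def build_preset(source_profile: str, target_profile: str, frame_rate: float,
                 move_to_bottom_center: bool = False) -> Dict:
    """Assemble a preset dictionary from already-parsed user requirements."""
    preset = {
        "source_frameRate": frame_rate,
        "target_frameRate": frame_rate,
        "incode": "auto",
        "automatic_offset": True,
        "source_profile": source_profile,
        "target_profile": target_profile,
    }
    if move_to_bottom_center:
        # Map captions at any alignment to bottom center, as in the example.
        preset["position"] = [{
            "from": {"alignment": "any"},
            "to": {"alignment": "center", "xPos": "center", "yPos": "end"},
        }]
    return preset


def validate_preset(preset: Dict) -> List[str]:
    """Return a sorted list of missing required keys (empty means valid)."""
    return sorted(REQUIRED_KEYS - preset.keys())


preset = build_preset("scenerist", "webVtt", 29.97, move_to_bottom_center=True)
print(json.dumps(preset, indent=2))
```

Validating before export gives the agent a chance to ask a follow-up question instead of emitting an incomplete configuration.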

Multi-Platform Support: Web GUI, CLI, and API

One of TimBot's key advantages is platform-agnostic preset generation. The same natural language query produces:

- Web GUI instructions: Step-by-step field configuration with visual guidance

- CLI presets: Complete JSON files ready for command-line execution

- API payloads: RESTful JSON configurations with proper form-data structure

This flexibility allows broadcast engineers to prototype workflows interactively in the GUI, then export validated configurations for automation in CI/CD pipelines or larger media asset management systems.
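One way to picture platform-agnostic generation is a single preset dictionary rendered three ways. The function names and the `options` form field below are assumptions for illustration; they are not the documented field names of the Closed Caption Converter API.

```python
import json
from typing import Dict, List


def render_gui_steps(preset: Dict) -> List[str]:
    """Turn each preset entry into a human-readable configuration step."""
    return [f"Set '{key}' to {json.dumps(value)}" for key, value in preset.items()]


def render_cli_preset(preset: Dict) -> str:
    """Serialize the preset as pretty-printed JSON, ready to save to a file."""
    return json.dumps(preset, indent=2)


def render_api_fields(preset: Dict) -> Dict[str, str]:
    """Wrap the preset as a JSON string in a form field (field name is hypothetical)."""
    return {"options": json.dumps(preset)}


preset = {"source_profile": "scenerist", "target_profile": "webVtt"}
for step in render_gui_steps(preset):
    print(step)
```

Because all three renderers read from the same dictionary, a configuration prototyped in the GUI and one exported for automation cannot drift apart.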

Lessons Learned: Production AI in Specialized Domains

Deploying TimBot revealed several insights about conversational AI in technical domains:

- Domain grounding is critical: Without strict knowledge base constraints, LLMs generate plausible-sounding but technically incorrect configurations. Our RAG implementation guards against this by forcing retrieval from verified sources.

- Users expect precision, not creativity: In creative writing, variability in AI responses can be desirable. In technical configuration, users need deterministic outputs. Our prompting emphasizes consistency and reproducibility.

- Iterative refinement works: Users treat TimBot like a colleague—asking follow-up questions, refining requirements, and testing configurations incrementally. This conversational approach reduces errors compared to monolithic documentation searches.

- Latency matters more than reasoning depth: For simple queries, sub-second responses enable fluid conversation. Users tolerate slightly longer responses (2-3 seconds) for complex multi-step workflows, but only when the interface visibly indicates that work is in progress.

Future Direction: MCP Server for Agentic Workflows

While TimBot demonstrates the value of conversational AI for human users, we recognize that the next frontier lies in machine-to-machine integration. Our development roadmap includes building a Model Context Protocol (MCP) server that will expose our caption conversion capabilities to third-party AI agents and automation frameworks.

What is the Model Context Protocol?

MCP is an emerging standard for enabling AI agents to interact with external tools and services in a structured, discoverable way. Unlike traditional APIs that require manual integration, MCP servers provide self-describing interfaces that agents can query, understand, and utilize autonomously.

In practical terms, this means an AI agent orchestrating a video publishing workflow could:

- Discover that caption conversion capabilities exist

- Query available input/output formats and configuration parameters

- Invoke conversion operations with appropriate context

- Handle errors and edge cases programmatically

All without requiring developers to write explicit integration code.
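The discover, query, and invoke steps above can be sketched as a tiny in-process handler for MCP-style JSON-RPC messages. The `tools/list` and `tools/call` method names and the `name`/`description`/`inputSchema` fields follow the MCP specification; the `convert_captions` tool, its parameters, and the placeholder result are hypothetical, and this sketch does not use an MCP SDK or perform any real conversion.

```python
from typing import Dict

# Hypothetical self-describing tool definition.
TOOLS = [{
    "name": "convert_captions",
    "description": "Convert a caption file between formats.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "source_profile": {"type": "string"},
            "target_profile": {"type": "string"},
            "source_frameRate": {"type": "number"},
        },
        "required": ["source_profile", "target_profile"],
    },
}]


def _error(request: Dict, code: int, message: str) -> Dict:
    return {"jsonrpc": "2.0", "id": request.get("id"),
            "error": {"code": code, "message": message}}


def handle(request: Dict) -> Dict:
    """Dispatch a single JSON-RPC request against the tool list."""
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": TOOLS}  # discovery: the agent learns what exists
    elif method == "tools/call":
        params = request.get("params", {})
        args = params.get("arguments", {})
        tool = next((t for t in TOOLS if t["name"] == params.get("name")), None)
        if tool is None:
            return _error(request, -32602, "Unknown tool")
        missing = [k for k in tool["inputSchema"]["required"] if k not in args]
        if missing:
            return _error(request, -32602, f"Missing arguments: {missing}")
        # Placeholder result; a real server would run the conversion here.
        text = f"Would convert {args['source_profile']} to {args['target_profile']}"
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(request, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}
```

An agent would first send `tools/list`, read the `inputSchema` to learn which arguments are required, and then send `tools/call` with a matching `arguments` object.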

Unlocking Agentic Media Workflows

An MCP-enabled caption conversion service enables several compelling automation scenarios:

- Intelligent Pipeline Orchestration: An AI agent managing video ingest could automatically detect caption file format mismatches and trigger conversions without human intervention. For example, receiving SCC files in a WebVTT-only delivery pipeline would trigger automatic conversion with format-appropriate parameters.

- Quality Assurance Automation: Agents could validate caption conformance against delivery specifications (frame rate accuracy, character limits, positioning compliance) and automatically reprocess files that fail validation—learning from patterns over time to predict likely issues.

- Multi-Platform Distribution: Content distribution systems could leverage the MCP server to generate platform-specific caption variants from a single master file—WebVTT for web players, SCC for broadcast, TTML for streaming platforms—each with appropriate formatting and compliance parameters.

- Natural Language Workflow Definition: Users could describe complex multi-step caption processing workflows in plain language, and an orchestration agent would break down requirements, call our MCP server for conversion steps, and coordinate with other services (transcoding, QC, delivery) to execute the complete pipeline.
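The pipeline orchestration scenario can be sketched as a planning step that compares incoming caption files against the format a delivery pipeline accepts. The extension map, the action labels, and the `plan_conversions` function are illustrative assumptions; a real agent would inspect file contents rather than trusting extensions.

```python
from pathlib import PurePath
from typing import List, Tuple

# Hypothetical mapping from file extension to caption format.
EXTENSION_TO_FORMAT = {
    ".scc": "scc",
    ".mcc": "mcc",
    ".vtt": "webvtt",
    ".ttml": "ttml",
}


def plan_conversions(files: List[str], target_format: str) -> List[Tuple[str, str]]:
    """Decide, per file, whether to pass it through, convert it, or flag it."""
    plan = []
    for name in files:
        fmt = EXTENSION_TO_FORMAT.get(PurePath(name).suffix.lower())
        if fmt is None:
            plan.append((name, "needs_review"))       # unknown format: escalate
        elif fmt == target_format:
            plan.append((name, "pass_through"))       # already compliant
        else:
            plan.append((name, f"convert:{fmt}->{target_format}"))
    return plan


print(plan_conversions(["ep1.SCC", "ep2.vtt", "notes.txt"], "webvtt"))
```

Each `convert:` entry in the plan corresponds to one conversion call the agent would make against the caption service, with unknown files routed to a human instead of guessed at.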

The Broader Vision: AI in the Media Supply Chain

Our work with TimBot and the upcoming MCP server represents a broader shift in how media infrastructure will evolve. Rather than monolithic systems with rigid APIs, we're moving toward composable, agent-accessible services that can be dynamically orchestrated based on content requirements.

Consider a future workflow where a content producer simply describes their distribution requirements in natural language: "I need this video prepared for Netflix, YouTube, and broadcast delivery in Japan." An orchestration agent could:

- Query platform-specific requirements (resolution, codec, caption format)

- Invoke transcoding services for video processing

- Call our MCP server to convert captions to platform-appropriate formats

- Coordinate localization services for Japanese subtitles

- Validate deliverables against compliance specifications

- Execute delivery to each platform's CDN

This level of automation isn't theoretical—it's achievable when media services expose themselves through agent-friendly protocols like MCP.

Conclusion: From Experimentation to Production Infrastructure

TimBot represents our initial exploration of AI-driven interfaces for caption processing. By building conversational access to our technology, we've gained valuable insights about how technical users interact with AI assistants and what it takes to deploy reliable AI in production environments.

Our next step—developing an MCP server—will extend these capabilities beyond human users to enable true agentic automation. This positions our caption conversion technology as a composable service that can be dynamically integrated into diverse media workflows without manual intervention.

As the media industry continues its digital transformation, the ability to programmatically reason about content processing requirements and autonomously orchestrate technical operations will become essential infrastructure. We're excited to be at the forefront of this shift, building the tools that will power the next generation of automated media production.

To experience TimBot Chat or learn more about our upcoming MCP server development, visit https://closedcaptionconverter.com. For technical inquiries about API integration or early access to our MCP implementation, contact our team.